Why the Modern Data Stack Now Ends in Apps

The modern data stack solved storage and transformation. The last mile—turning data into action—still hurts. Data apps are the missing piece.

Key Takeaways

The modern data stack excels at centralizing, transforming, and governing data, but teams still struggle to turn that data into action.

The "last mile" problem persists: emailed CSVs, fragile dashboards, and one-off tools that never quite fit the workflow.

Data apps—reusable, code-backed tools that embed data into decisions and automations—are the natural endpoint of the stack.

AI agents now make it possible for PMs, analysts, and operators to build that last mile themselves, without waiting on engineering.

The true value of your data infrastructure is realized when the people closest to problems can quickly turn data into tools, not just reports.

The modern data stack was supposed to change everything. And in many ways, it did.

Over the past decade, teams have rebuilt their data infrastructure from the ground up. Cloud data platforms like Snowflake, BigQuery, and Databricks replaced brittle on-prem systems with elastic, SQL-friendly storage. ELT pipelines flipped the old extract-transform-load model on its head, letting teams land raw data first with ingestion tools like Fivetran and transform it later in the warehouse with dbt. BI platforms got smarter. Data catalogs and lineage tools improved governance. The plumbing, finally, started to work.

And yet.

Ask anyone who works with data day-to-day—product managers, analysts, operations leads, even data engineers—and you'll hear a familiar frustration. The infrastructure is better than ever, but the last mile is still painful. Getting data into the warehouse is solved. Getting value out of it? That's where teams still struggle.

The Last Mile Problem

We've talked to hundreds of teams running modern data stacks. The pattern is remarkably consistent. They've invested heavily in infrastructure. They have clean, well-modeled data in their warehouse. Their dbt project is a thing of beauty. And then, when it comes time to actually use that data in a business process, something breaks down.

The finance team needs a scenario-planning tool that pulls from the warehouse and lets them model different revenue assumptions. Instead, they get a static dashboard and a monthly export to Excel.

The customer success team wants a health console that combines product usage, billing data, and support tickets into a single view they can act on. Instead, they juggle three different tools and a shared Google Sheet.

The operations team needs to trigger a workflow when a metric crosses a threshold, such as flagging accounts at risk of churn or alerting when inventory drops below safety stock. Instead, someone checks a dashboard every morning and sends a Slack message manually.

These aren't edge cases. They're the norm. According to Gartner, poor data literacy and the inability to effectively use data for decision-making remain top barriers to realizing value from analytics investments. The stack is modern. The outcomes are often still manual, fragile, and slow.

The root cause isn't the warehouse or the transformation layer. It's what happens after. Teams have two choices: rely on dashboards and reports (which are passive, read-only, and disconnected from workflows), or build custom internal tools (which require engineering resources that are perpetually over capacity).

Neither option scales. Dashboards inform but don't enable action. Custom tools take months to build and years to maintain. The result is a gap between data infrastructure and data impact—a last mile that never gets paved.

The Natural Endpoint: Data Apps

We believe the modern data stack doesn't end at BI. It ends at apps.

Not consumer apps. Not SaaS products. Data apps: reusable, code-backed tools that sit on top of your warehouse or data streams and embed data directly into workflows, decisions, and automations.

A data app combines three ingredients: data (from your warehouse, APIs, or files), logic (queries, transformations, business rules, even ML models), and a surface for humans or systems to use that logic repeatedly. That surface might be an interactive UI—a dashboard, an internal tool, a review queue. Or it might be a headless pipeline: an ETL job, a scheduled report, an API that triggers downstream systems. Or it might be a conversational workflow where you explore and iterate on data without publishing anything at all.
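To make the three ingredients concrete, here is a minimal Python sketch built around the finance scenario-planning tool mentioned earlier. Everything in it is an illustrative assumption rather than a prescribed design: the names (`Assumptions`, `load_starting_mrr`, `project_mrr`, `run_scenario`), the starting figure, and the simple compounding formula are all made up, and the data function returns a constant where a real app would query the warehouse.

```python
from dataclasses import dataclass


@dataclass
class Assumptions:
    """User-adjustable inputs (hypothetical names; monthly rates as fractions)."""
    growth: float     # new-business growth rate
    churn: float      # revenue lost to cancellations
    expansion: float  # revenue gained from existing customers


def load_starting_mrr() -> float:
    """Data: stand-in for a warehouse query; returns a made-up starting MRR."""
    return 100_000.0


def project_mrr(start: float, a: Assumptions, months: int) -> list[float]:
    """Logic: compound the net monthly rate over the requested horizon."""
    net_rate = a.growth + a.expansion - a.churn
    projection = []
    mrr = start
    for _ in range(months):
        mrr = mrr * (1 + net_rate)
        projection.append(round(mrr, 2))
    return projection


def run_scenario(a: Assumptions, months: int = 3) -> list[float]:
    """Surface: one entry point that a UI form, scheduled job, or API could call."""
    return project_mrr(load_starting_mrr(), a, months)
```

Calling `run_scenario(Assumptions(growth=0.04, churn=0.02, expansion=0.01), months=12)` returns one projected value per month. The separation is the point of the sketch: the data function can be swapped for a real query, and the same logic can sit behind an interactive form or a headless job without changes.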

The point is that data apps are where data becomes operational. They're not just reports you look at—they're tools you use. And unlike dashboards, they can be tailored to the exact shape of a workflow, updated as requirements change, and wired into the systems where work actually happens.

Consider a few examples:

A CSM health console. Customer success teams need to see the full picture for each account: product usage trends, billing status, open support tickets, recent NPS scores. A dashboard can show some of this, but a data app can combine it into a single view, highlight accounts at risk, and let CSMs take action—logging notes, triggering outreach, updating status—without leaving the tool.

A revenue scenario app for finance. Instead of exporting data to Excel and manually modeling assumptions, a finance team can use a data app that pulls live data from the warehouse, lets users adjust variables (growth rate, churn, expansion), and instantly recalculates projections. The app becomes a reusable tool, not a one-time spreadsheet.

An operational alerting tool. When a key metric crosses a threshold—inventory below safety stock, support queue exceeding SLA, conversion rate dropping—a data app can detect it, notify the right people, and even trigger downstream workflows: creating a ticket, paging on-call, or kicking off a replenishment order.
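The alerting example can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a description of how any particular product implements it: the `Rule` fields, the metric names, and the `notify` callback are hypothetical, and a real app would read metrics from the warehouse and send notifications to a chat or paging system instead of a plain function.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One threshold rule (all field names here are hypothetical)."""
    metric: str
    threshold: float
    direction: str  # "below" or "above"
    message: str


def breached(rule: Rule, value: float) -> bool:
    """Return True when the value is on the wrong side of the threshold."""
    if rule.direction == "below":
        return value < rule.threshold
    if rule.direction == "above":
        return value > rule.threshold
    raise ValueError(f"unknown direction: {rule.direction!r}")


def check_rules(
    rules: list[Rule],
    metrics: dict[str, float],
    notify: Callable[[str], None],
) -> list[str]:
    """Evaluate every rule against a metrics snapshot and notify on breaches."""
    fired = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # metric missing from this snapshot; skip rather than guess
        if breached(rule, value):
            notify(f"{rule.message} (value={value}, threshold={rule.threshold})")
            fired.append(rule.metric)
    return fired


if __name__ == "__main__":
    rules = [
        Rule("inventory_units", 50, "below", "Inventory below safety stock"),
        Rule("support_queue_hours", 24, "above", "Support queue exceeding SLA"),
    ]
    snapshot = {"inventory_units": 32, "support_queue_hours": 6}
    check_rules(rules, snapshot, notify=print)
```

Passing `notify` as a callback keeps the detection logic independent of the delivery channel, so the same check could create a ticket, page on-call, or start a replenishment order.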

These aren't hypotheticals. They're the kinds of tools teams need every day. The problem is that building them has traditionally required engineering effort that's hard to justify for internal use cases.

Who Builds the Last Mile?

This is where the economics of the modern data stack start to break down.

Data teams are great at building infrastructure. Engineering teams are great at building products. But the last mile—the custom tools that connect data to specific workflows—often falls into a gap. Data teams don't have the frontend skills to build rich UIs. Engineering teams are focused on customer-facing products. And the people closest to the problem—PMs, analysts, operations leads—lack the technical resources to build what they need.

The result is a backlog that never shrinks. Internal tool requests sit for months. Analysts build fragile workarounds in spreadsheets. Business teams adapt their processes to fit the tools they have, rather than the tools they need.

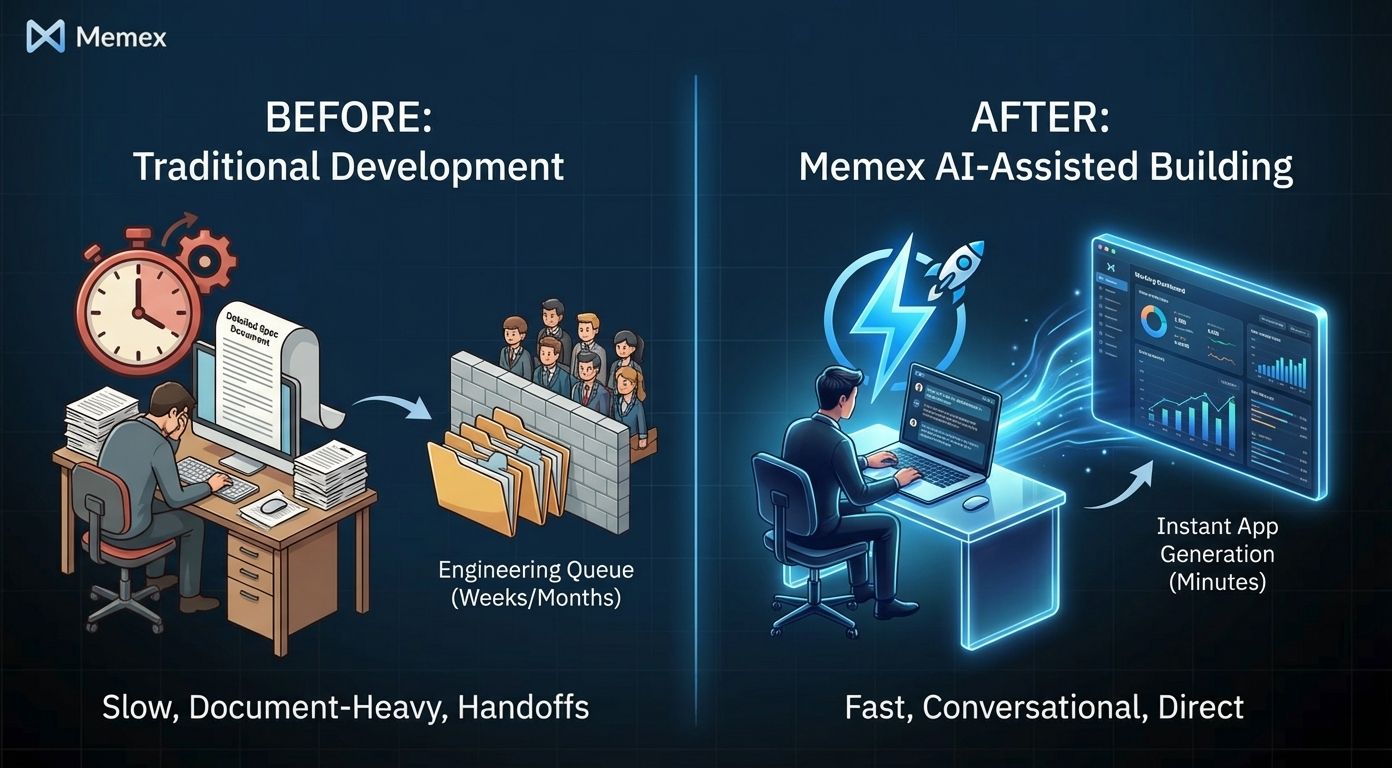

This is changing. AI agents are rewriting who can build software.

Tools like Memex are designed to let anyone who understands their data and their problem build the tools they need—without writing code from scratch, without waiting on engineering, and without settling for a dashboard that doesn't quite fit.

How AI Agents Change the Equation

At Memex, we've watched thousands of users build data apps that would have been impossible for them a year ago. Not because the technology didn't exist, but because the skills required were too specialized.

A product manager who needed a customer health console built it in an afternoon. A financial analyst who wanted a scenario-planning tool created one without touching Excel. An operations lead who needed automated alerting wired it up in hours, not weeks. These aren't developers. They're domain experts who understand their data and their workflows—and now have a way to turn that understanding into working software.

The shift is profound. When AI agents handle the mechanics of coding, debugging, and deployment, the bottleneck moves from "who can build it" to "who understands the problem." And the people closest to the problem are usually not engineers. They're the PMs, analysts, and operators who live in the data every day.

We wrote about this shift in our post on how to build data pipelines through conversation. The insight is simple: if an agent can plan, write, run, and debug code autonomously, then anyone who can describe what they want can build what they need.

Connecting to Your Existing Stack

One concern we hear often: "We've already invested in Snowflake / BigQuery / dbt / Fivetran / Looker. Do we need to rip anything out?"

No. Data apps sit on top of your existing infrastructure. They consume the warehouse as a data source. They use dbt models as the foundation for queries. They complement BI tools by handling the use cases dashboards can't.

Memex, for example, can connect securely to your warehouse, pull from your dbt models, or hit the APIs your data team has already built. You can explore and validate data through conversation—asking questions, running queries, iterating on logic—before committing to a build. And when you're ready, Memex can generate both UI-based apps (internal tools, dashboards, admin panels) and headless pipelines (ETL jobs, scheduled scripts, API services).

This matters because the modern data stack is modular. You don't need a monolithic platform; you need tools that interoperate. Data apps are a new layer in the stack, not a replacement for it.
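As a rough illustration of "sitting on top" of existing models, the sketch below reads from a modeled table and leaves the stack untouched. It is not Memex's API or any vendor's connector: Python's built-in `sqlite3` module stands in for a warehouse connection, and the table `fct_account_usage`, its columns, and its rows are made up for the example.

```python
import sqlite3


def connect_demo_warehouse() -> sqlite3.Connection:
    """Stand-in for a warehouse connection, seeded with a made-up model table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fct_account_usage (account_id TEXT, weekly_active_users INTEGER)"
    )
    conn.executemany(
        "INSERT INTO fct_account_usage VALUES (?, ?)",
        [("acme", 42), ("globex", 3), ("initech", 17)],
    )
    return conn


def low_usage_accounts(conn: sqlite3.Connection, max_users: int) -> list[str]:
    """Read-only query against the existing model; nothing upstream is modified."""
    rows = conn.execute(
        "SELECT account_id FROM fct_account_usage "
        "WHERE weekly_active_users <= ? ORDER BY account_id",
        (max_users,),
    ).fetchall()
    return [account_id for (account_id,) in rows]


if __name__ == "__main__":
    conn = connect_demo_warehouse()
    print(low_usage_accounts(conn, max_users=20))
```

In a real deployment the connection would come from your warehouse's own driver and the table would be one your data team already maintains. The app only adds a query and some logic on top.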

Memex reads from your existing infrastructure, helps you build on top of it, and deploys apps so your team can use them day-to-day.

From Reports to Tools

Here's the strategic shift we're advocating: stop thinking of your data stack as something that produces reports. Start thinking of it as something that produces tools.

Reports are passive. They inform, but they don't enable action. They require a human to read, interpret, and then do something in another system. The loop is slow and error-prone.

Tools are active. They embed data directly into workflows. They let users take action—updating records, triggering processes, modeling scenarios—without leaving the interface. The loop is fast and integrated.

The modern data stack was designed to make data accessible. Data apps are what make data usable. They're the missing piece that turns infrastructure investment into operational impact.

And now, with AI agents handling the build, the people closest to the problems can create those tools themselves. The PM who knows exactly what the CSM team needs can build the health console. The analyst who understands the revenue model can build the scenario app. The ops lead who knows the workflow can build the alerting system.

The value of your data stack is fully realized only when building on it is no longer limited to engineers: when anyone with a problem and access to the data can quickly turn that data into a tool.

Getting Started

If you're running a modern data stack and feeling the last-mile pain, here's our advice: start small. Pick one workflow where your team is currently exporting CSVs, maintaining a fragile spreadsheet, or wishing they had an internal tool.

Then try building it as a data app. Connect to your warehouse. Describe what you need. Let an AI agent handle the mechanics. See how fast you can go from idea to working tool.

That's what Memex is built for. We help teams turn any data—from a warehouse to an API to a CSV—into working apps. Dashboards, workflows, automations, internal tools. We handle the full lifecycle: connecting to data, planning the build, writing the code, debugging, and deploying. You own the code. You can modify it. And your team can use the result day-to-day.

The modern data stack was a decade-long investment in infrastructure. Data apps are how that investment pays off.

If you want to see what's possible, try Memex for free. Or join our Discord community to see what others are building and share your own use cases.

Frequently Asked Questions

What is the "last mile" problem in the modern data stack?

The last mile problem refers to the difficulty of turning centralized, well-modeled data into actionable tools and workflows. Even with excellent data infrastructure, teams often resort to manual processes, emailed spreadsheets, or fragile dashboards because building custom internal tools requires engineering resources that are scarce.

How are data apps different from dashboards and BI tools?

Dashboards are passive: they display data for humans to read and interpret. Data apps are active: they embed data into workflows, let users take action, and can trigger automations. A dashboard shows you a metric; a data app lets you do something about it.

Do I need to replace my existing data stack to build data apps?

No. Data apps sit on top of your existing infrastructure. They connect to your warehouse (Snowflake, BigQuery, Databricks), use your dbt models, and complement your BI tools. They're a new layer in the stack, not a replacement for it.

Who can build data apps with AI tools like Memex?

Anyone who understands their data and their problem. Memex is designed for tech-adjacent professionals—PMs, analysts, operations leads, data scientists—who work heavily with data but aren't full-time developers. The AI agent handles the coding, debugging, and deployment.

What kinds of data apps can I build with Memex?

Memex supports a wide range: interactive UIs (dashboards, internal tools, admin panels), headless pipelines (ETL jobs, scrapers, scheduled reports), and conversational workflows where you explore and iterate on data without publishing an interface. If it wraps data, logic, and repeatability into something reusable, it's a data app.